Hiring in AI-native startups looks very different from the traditional tech playbook. Founders are experimenting with new ways to test candidates, keep teams efficient, and adapt to an environment where productivity expectations have skyrocketed. After speaking with dozens of AI startup founders, I found some clear patterns. Here are the emerging practices, challenges, and trade-offs shaping recruitment today.

Today’s AI Hiring Landscape (September 25, 2025)

AI startups are navigating a hyper-competitive talent market shaped by rapid technological change, economic uncertainty, and workflow disruption. As of Q3 2025, hiring remains the top bottleneck for 62% of founders, ahead of funding (45%) and scaling (38%), according to Lightspeed and Bessemer surveys. Filling core team roles takes 6+ months because of compensation mismatches, while AI has cut entry-level hiring needs by 23–50%. AI research scientists are the hardest to recruit. Founders on X echo the sentiment: “hiring talent” is their No. 1 pain point, and ego-driven misfits can derail early traction.

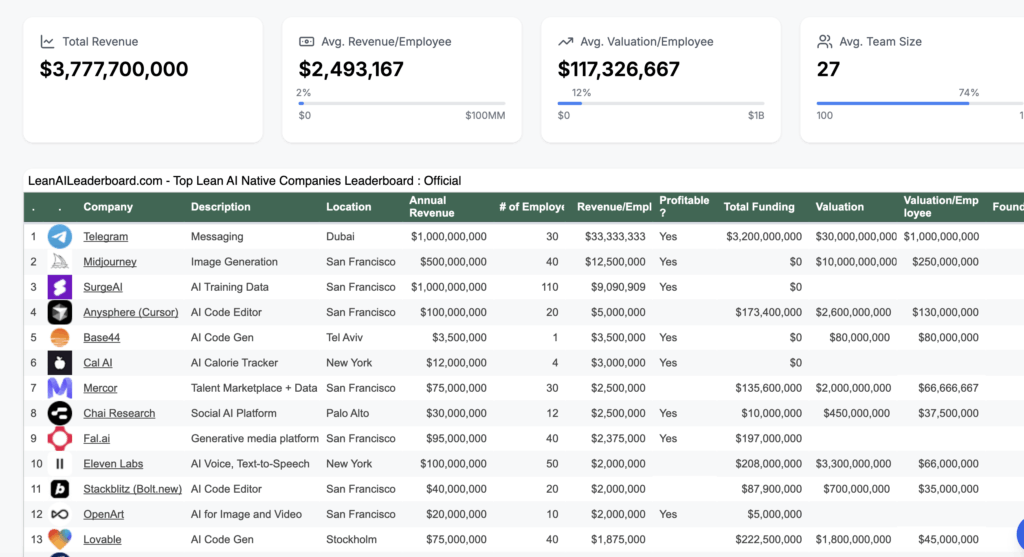

Rethinking Headcount

Traditional org charts break down at 50–100 people. Leaders now scale teams with AI rather than headcount. Firms on the Lean AI leaderboard (https://leanaileaderboard.com/) average 27 employees and more than $2.49M in annual revenue per employee, which raises the bar for every human hire.

Partners or Employees?

Early-stage startups often blur the line between the two. Especially before Series A, founders seek equity-heavy partners (e.g., founding engineers with 1–2% equity) rather than employees. This attracts risk-tolerant talent but can create friction: partners demand autonomy, while employees fit better into structured scaling. After the seed stage, most startups (70% of the YC AI batch) shift to hiring employees for compliance and IP control. Cultural misfit is a key risk: 18% of early-stage failures trace back to “ego issues.”

Which Roles Are in Demand?

Besides AI Researchers, lean teams focus on high-impact roles:

- AI/ML engineers (model + infra)

- Data scientists (insights)

- Full-stack devs (deployment)

Emerging roles include prompt engineers, AI ethicists, and MLOps specialists. Vertical AI firms (e.g., biotech) need domain experts, while horizontal ones (e.g., copilots) lean toward generalists. Shortages are sharpest for ML engineers (demand up 40%) and AI product managers.

| Role Category | Early-Stage Priority | 2025 Demand Growth |
| --- | --- | --- |
| Engineering (AI/ML) | Model dev, infra | +45% |
| Product/Data | Ideation, validation | +30% |
| Ops/Governance | Deployment, ethics | +25% |

Defining Roles and Outcomes

Job descriptions are shifting from tool checklists to outcome-based scopes:

- “Ship AI prototypes in <2 weeks”

- “Collaborate on ethics reviews”

- “Design agentic workflows”

Interviews mirror this shift: candidates may be asked to refactor AI-generated code or design workflows under real-world constraints.

Experience: Asset or Liability?

Experience is valuable for judgment (architecture, tradeoffs), but rigidity is a liability. Some 71% of new founders prefer AI-fluent juniors over “2022-style” seniors. Yet more companies now value a new kind of senior engineer. Andrew Ng's argument, in short: fundamentals + AI tools > legacy syntax. Seniors who adapt thrive; those with anti-AI mindsets stall.

In Andrew Ng's words: “There is a stereotype of ‘AI Native’ fresh college graduates who outperform experienced developers. There is some truth to this. Multiple times, I have hired, for full-stack software engineering, a new grad who really knows AI over an experienced developer who still works 2022-style. But the best developers I know aren’t recent graduates (no offense to the fresh grads!). They are experienced developers who have been on top of changes in AI. The most productive programmers today deeply understand computers, how to architect software, and how to make complex tradeoffs — and who additionally are familiar with cutting-edge AI tools.”

Today, one senior engineer working with AI can match the output of roughly four engineers, which makes seniors critical for both speed and oversight.

On the other hand, if AI is wiping out almost half of entry-level tasks, should companies simply skip juniors and hire only seniors? There is a catch: stop hiring juniors today, and in just a few years you’ll have a shallow pool of mid-level talent. By 2030, that gap could become a serious problem.

The smarter play isn’t to eliminate juniors but to reimagine their roles. Freed from cranking out rote code, AI-native juniors are now spotting anomalies, handling edge cases, and taking on responsibilities that used to come much later in a career. In fact, Harvard research shows that juniors on AI-driven teams shoulder 77% more responsibility than before.

New Interview Trend: From Whiteboards to Take-Homes

While LeetCode-style interviews remain popular at traditional tech companies, AI startups increasingly use AI-heavy take-home projects or intensive live collaboration sessions.

Instead of 60-minute whiteboard sessions, some startups now assign multi-day take-home projects that can’t be completed without AI.

Other startups run intensive one-hour live sessions in which the interviewer works alongside the candidate to fix a messy project seeded with bugs.

In either case, candidates must ship a working prototype or improve a messy project under severe time constraints, then walk through their process in a short debrief.

The focus is not just on what they built but on how they built it:

- Problem definition and task breakdown

- Prompt adjustments

- Error handling

- Iteration steps

New Evaluation Criteria for AI Engineers

- Success isn’t judged by hit rates or error counts, but by the engineer’s intuition for how to apply AI to the problem or to their own workflow.

- Understanding of LLM-based programming concepts and fluency with AI coding and code-review tools (Copilot, Cursor, etc.).

- Grasp of the nuances of context engineering, AI hallucinations, and model limitations.

This method filters for people who can truly harness AI tools rather than just follow tutorials. But the downside is clear: only about 1 in 10 candidates accept such demanding tasks. Strong candidates may skip the process entirely unless motivated by a referral or financial compensation. Some startups even pay candidates a partial fee (e.g., $150 for a project worth about $500) to signal fairness.